Scientific Inquirer: Why Searching for Truth Online Might Be Making Us More Biased

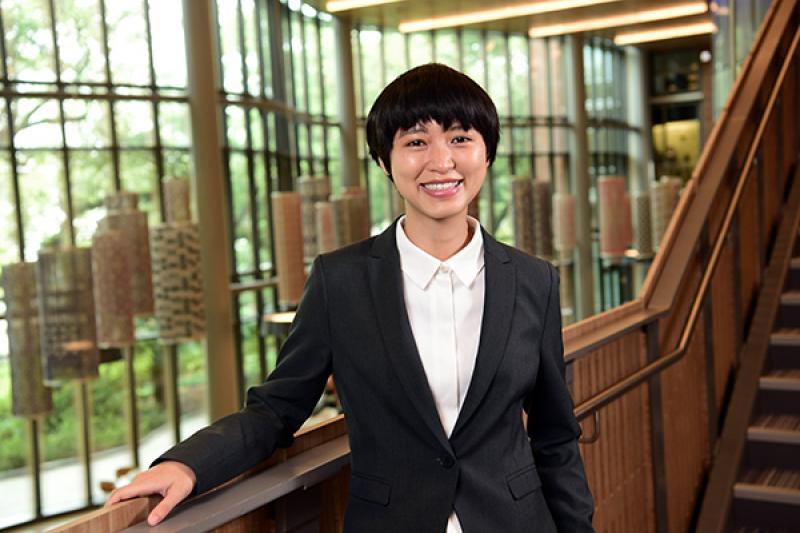

Eugina Leung, assistant professor of marketing, was interviewed by Scientific Inquirer about her research finding that search engine users tend to reinforce their own beliefs and biases as a result of the search terms they use.

“Search engines like Google or ChatGPT are engineered to deliver the most relevant results for the specific words you used. So, a search for ‘dangers of caffeine’ will return a list of articles about its negative effects. The algorithm is doing its job perfectly, but the result is a narrow slice of information that matches the bias in your original query.”

To read the article in its entirety, visit scientificinquirer.com.
