CNET: The Scientific Reason Why ChatGPT Leads You Down Rabbit Holes

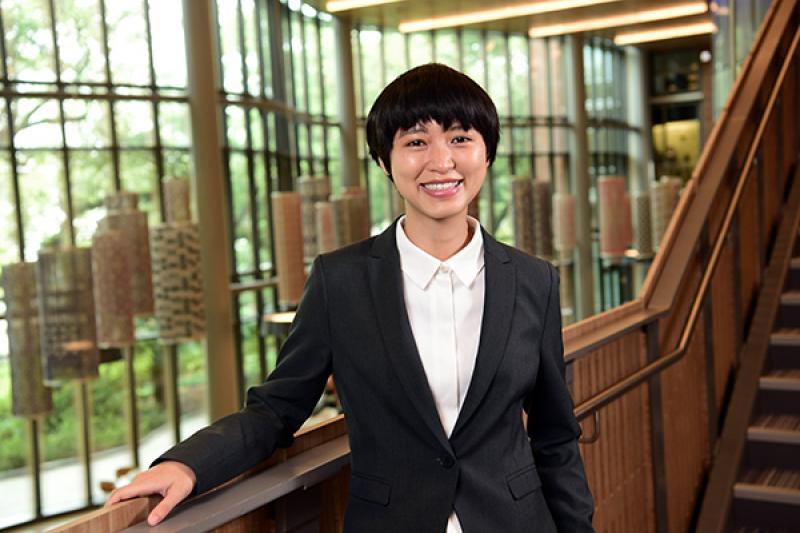

Eugina Leung, assistant professor of marketing, was interviewed by CNET for a story about her research showing that search engines tend to reinforce users' existing beliefs because of the search terms those users choose.

"When people look up information, whether it's Google or ChatGPT, they actually use search terms that reflect what they already believe," Eugina Leung, an assistant professor at Tulane University and lead author of the study, told me.

To read the story in its entirety, visit cnet.com.